Concurrency Control:Insert and Delete Operations

Insert and Delete Operations

Until now, we have restricted our attention to read and write operations. This restriction limits transactions to data items already in the database. Some transactions require not only access to existing data items, but also the ability to create new data items. Others require the ability to delete data items. To examine how such transactions affect concurrency control, we introduce these additional operations:

• delete(Q) deletes data item Q from the database.

• insert(Q) inserts a new data item Q into the database and assigns Q an initial value.

An attempt by a transaction Ti to perform a read(Q) operation after Q has been deleted results in a logical error in Ti. Likewise, an attempt by a transaction Ti to perform a read(Q) operation before Q has been inserted results in a logical error in Ti. It is also a logical error to attempt to delete a nonexistent data item.

Deletion

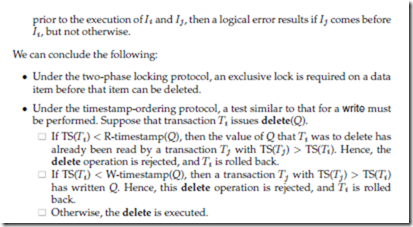

To understand how the presence of delete instructions affects concurrency control, we must decide when a delete instruction conflicts with another instruction. Let Ii and Ij be instructions of Ti and Tj , respectively, that appear in schedule S in consecutive order. Let Ii = delete(Q). We consider several instructions Ij .

Insertion

We have already seen that an insert(Q) operation conflicts with a delete(Q) operation. Similarly, insert(Q) conflicts with a read(Q) operation or a write(Q) operation; no read or write can be performed on a data item before it exists.

Since an insert(Q) assigns a value to data item Q, an insert is treated similarly to a write for concurrency-control purposes:

• Under the two-phase locking protocol, if Ti performs an insert(Q) operation, Ti is given an exclusive lock on the newly created data item Q.

• Under the timestamp-ordering protocol, if Ti performs an insert(Q) operation, the values R-timestamp(Q) and W-timestamp(Q) are set to TS(Ti).

The Phantom Phenomenon

Consider transaction T29 that executes the following SQL query on the bank database:

Let S be a schedule involving T29 and T30. We expect there to be potential for a conflict for the following reasons:

• If T29 uses the tuple newly inserted by T30 in computing sum(balance), then T29 read a value written by T30. Thus, in a serial schedule equivalent to S, T30 must come before T29.

• If T29 does not use the tuple newly inserted by T30 in computing sum(balance), then in a serial schedule equivalent to S, T29 must come before T30.

The second of these two cases is curious. T29 and T30 do not access any tuple in common, yet they conflict with each other! In effect, T29 and T30 conflict on a phantom tuple. If concurrency control is performed at the tuple granularity, this conflict would go undetected. This problem is called the phantom phenomenon.

To prevent the phantom phenomenon, we allow T29 to prevent other transactions from creating new tuples in the account relation with branch-name = “Perryridge.”

To find all account tuples with branch-name = “Perryridge”, T29 must search either the whole account relation, or at least an index on the relation. Up to now, we have assumed implicitly that the only data items accessed by a transaction are tuples. However, T29 is an example of a transaction that reads information about what tuples are in a relation, and T30 is an example of a transaction that updates that information.

Clearly, it is not sufficient merely to lock the tuples that are accessed; the information used to find the tuples that are accessed by the transaction must also be locked.

The simplest solution to this problem is to associate a data item with the relation; the data item represents the information used to find the tuples in the relation. Transactions, such as T29, that read the information about what tuples are in a relation would then have to lock the data item corresponding to the relation in shared mode.

Transactions, such as T30, that update the information about what tuples are in a relation would have to lock the data item in exclusive mode. Thus, T29 and T30 would conflict on a real data item, rather than on a phantom.

Do not confuse the locking of an entire relation, as in multiple granularity locking, with the locking of the data item corresponding to the relation. By locking the data item, a transaction only prevents other transactions from updating information about what tuples are in the relation. Locking is still required on tuples. A transaction that directly accesses a tuple can be granted a lock on the tuples even when another transaction has an exclusive lock on the data item corresponding to the relation itself.

The major disadvantage of locking a data item corresponding to the relation is the low degree of concurrency— two transactions that insert different tuples into a relation are prevented from executing concurrently.

A better solution is the index-locking technique. Any transaction that inserts atuple into a relation must insert information into every index maintained on the relation. We eliminate the phantom phenomenon by imposing a locking protocol for indices. For simplicity we shall only consider B+-tree indices.

As we saw in Chapter 12, every search-key value is associated with an index leaf node. A query will usually use one or more indices to access a relation. An insert must insert the new tuple in all indices on the relation. In our example, we assume that there is an index on account for branch-name. Then, T30 must modify the leaf containing the key Perryridge. If T29 reads the same leaf node to locate all tuples pertaining to the Perryridge branch, then T29 and T30 conflict on that leaf node.

The index-locking protocol takes advantage of the availability of indices on a relation, by turning instances of the phantom phenomenon into conflicts on locks on index leaf nodes. The protocol operates as follows:

• Every relation must have at least one index.

• A transaction Ti can access tuples of a relation only after first finding them through one or more of the indices on the relation.

• A transaction Ti that performs a lookup (whether a range lookup or a point lookup) must acquire a shared lock on all the index leaf nodes that it accesses.

• A transaction Ti may not insert, delete, or update a tuple ti in a relation r without updating all indices on r. The transaction must obtain exclusive locks on all index leaf nodes that are affected by the insertion, deletion, or update. For insertion and deletion, the leaf nodes affected are those that contain (after insertion) or contained (before deletion) the search-key value of the tuple. For updates, the leaf nodes affected are those that (before the modification) contained the old value of the search-key, and nodes that (after the modification) contain the new value of the search-key.

• The rules of the two-phase locking protocol must be observed.

Variants of the index-locking technique exist for eliminating the phantom phenomenon under the other concurrency-control protocols presented in this chapter.

Comments

Post a Comment