Join Operation

Join Operation

In this section, we study several algorithms for computing the join of relations, and we analyze their respective costs.

We use the term equi-join to refer to a join of the form r r.A=s.B s, where A and B are attributes or sets of attributes of relations r and s respectively.

We use as a running example the expression

Nested-Loop Join

Like the linear file-scan algorithm for selection, the nested-loop join algorithm requires no indices, and it can be used regardless of what the join condition is. Extending the algorithm to compute the natural join is straightforward, since the natural

join can be expressed as a theta join followed by elimination of repeated attributes by a projection. The only change required is an extra step of deleting repeated attributes from the tuple tr · ts, before adding it to the result.

The nested-loop join algorithm is expensive, since it examines every pair of tuples in the two relations. Consider the cost of the nested-loop join algorithm. The number of pairs of tuples to be considered is nr ∗ ns, where nr denotes the number of tuples in r, and ns denotes the number of tuples in s. For each record in r, we have to perform a complete scan on s. In the worst case, the buffer can hold only one block of each

relation, and a total of nr ∗ bs + br block accesses would be required, where br and bs denote the number of blocks containing tuples of r and s respectively. In the best case, there is enough space for both relations to fit simultaneously in memory, so each block would have to be read only once; hence, only br + bs block accesses would be required.

If one of the relations fits entirely in main memory, it is beneficial to use that relation as the inner relation, since the inner relation would then be read only once.

Therefore, if s is small enough to fit in main memory, our strategy requires only a total br + bs accesses — the same cost as that for the case where both relations fit in memory.

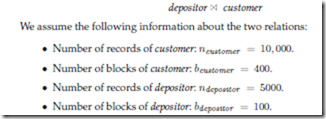

Now consider the natural join of depositor and customer. Assume for now that we have no indices whatsoever on either relation, and that we are not willing to create any index. We can use the nested loops to compute the join; assume that depositor is the outer relation and customer is the inner relation in the join. We will have to examine 5000 ∗ 10000 = 50 ∗ 106 pairs of tuples. In the worst case, the number of block accesses is 5000 ∗ 400 + 100 = 2,000,100. In the best-case scenario, however, we can read both relations only once, and perform the computation. This computation requires at most 100 + 400 = 500 block accesses — a significant improvement over the worst-case scenario. If we had used customer as the relation for the outer loop and depositor for the inner loop, the worst-case cost of our final strategy would have been lower: 10000 ∗ 100 + 400 = 1,000,400.

Block Nested-Loop Join

If the buffer is too small to hold either relation entirely in memory, we can still obtain a major saving in block accesses if we process the relations on a per-block basis, rather than on a per-tuple basis. Figure 13.5 shows block nested-loop join, which is a variant of the nested-loop join where every block of the inner relation is paired with every block of the outer relation. Within each pair of blocks, every tuple in one block

is paired with every tuple in the other block, to generate all pairs of tuples. As before, all pairs of tuples that satisfy the join condition are added to the result.

The primary difference in cost between the block nested-loop join and the basic nested-loop join is that, in the worst case, each block in the inner relation s is read only once for each block in the outer relation, instead of once for each tuple in the outer relation. Thus, in the worst case, there will be a total of br ∗ bs + br block accesses, where br and bs denote the number of blocks containing records of r and s respectively. Clearly, it is more efficient to use the smaller relation as the outer relation, in case neither of the relations fits in memory. In the best case, there will be br + bs block accesses.

Now return to our example of computing depositor customer, using the block nested-loop join algorithm. In the worst case we have to read each block of customer once for each block of depositor. Thus, in the worst case, a total of 100 ∗ 400 + 100 =40, 100 block accesses are required. This cost is a significant improvement over the 5000 ∗ 400 + 100 = 2, 000, 100 block accesses needed in the worst case for the basic nested-loop join. The number of block accesses in the best case remains the same — namely, 100 + 400 = 500.

The performance of the nested-loop and block nested-loop procedures can be further improved:

• If the join attributes in a natural join or an equi-join form a key on the inner relation, then for each outer relation tuple the inner loop can terminate as soon as the first match is found.

• In the block nested-loop algorithm, instead of using disk blocks as the blocking unit for the outer relation, we can use the biggest size that can fit in memory, while leaving enough space for the buffers of the inner relation and the output. In other words, if memory has M blocks, we read in M − 2 blocks

of the outer relation at a time, and when we read each block of the inner re- lation we join it with all the M − 2 blocks of the outer relation. This change reduces the number of scans of the inner relation from br to pbr /(M − 2)l, where br is the number of blocks of the outer relation. The total cost is then pbr /(M − 2)l∗ bs + br .

• We can scan the inner loop alternately forward and backward. This scanning method orders the requests for disk blocks so that the data remaining in the buffer from the previous scan can be reused, thus reducing the number of disk accesses needed.

• If an index is available on the inner loop’s join attribute, we can replace file scans with more efficient index lookups. Section 13.5.3 describes this optimization.

Indexed Nested-Loop Join

In a nested-loop join (Figure 13.4), if an index is available on the inner loop’s join attribute, index lookups can replace file scans. For each tuple tr in the outer relation r, the index is used to look up tuples in s that will satisfy the join condition with tuple tr .

This join method is called an indexed nested-loop join; it can be used with existing indices, as well as with temporary indices created for the sole purpose of evaluating the join.

Looking up tuples in s that will satisfy the join conditions with a given tuple tr is essentially a selection on s. For example, consider depositor customer. Suppose that we have a depositor tuple with customer-name “John”. Then, the relevant tuples in s are those that satisfy the selection “customer-name = John”.

The cost of an indexed nested-loop join can be computed as follows. For each tuple in the outer relation r, a lookup is performed on the index for s, and the relevant tuples are retrieved. In the worst case, there is space in the buffer for only one page of r and one page of the index. Then, br disk accesses are needed to read relation r, where br denotes the number of blocks containing records of r. For each tuple in r, we perform an index lookup on s. Then, the cost of the join can be computed as br + nr ∗ c, where nr is the number of records in relation r, and c is the cost of a single selection on s using the join condition. We have seen in Section 13.3 how to estimate the cost of a single selection algorithm (possibly using indices); that estimate gives us the value of c.

The cost formula indicates that, if indices are available on both relations r and s, it is generally most efficient to use the one with fewer tuples as the outer relation.

For example, consider an indexed nested-loop join of depositor customer, with depositor as the outer relation. Suppose also that customer has a primary B+-tree index on the join attribute customer-name, which contains 20 entries on an average in each index node. Since customer has 10,000 tuples, the height of the tree is 4, and one more access is needed to find the actual data. Since ndepositor is 5000, the total cost is 100 + 5000 ∗ 5 = 25, 100 disk accesses. This cost is lower than the 40, 100 accesses needed for a block nested-loop join.

Merge Join

The merge join algorithm (also called the sort – merge join algorithm) can be used to compute natural joins and equi-joins. Let r(R) and s(S) be the relations whose natural join is to be computed, and let R∩S denote their common attributes. Suppose

that both relations are sorted on the attributes R ∩S. Then, their join can be computed by a process much like the merge stage in the merge – sort algorithm.

Figure 13.6 shows the merge join algorithm. In the algorithm, JoinAttrs refers to the attributes in R ∩ S, and tr ts, where tr and ts are tuples that have the same values for JoinAttrs, denotes the concatenation of the attributes of the tuples, followed by projecting out repeated attributes. The merge join algorithm associates one pointer with each relation. These pointers point initially to the first tuple of the respective relations. As the algorithm proceeds, the pointers move through the relation. A group of tuples of one relation with the same value on the join attributes is read into Ss.

The algorithm in Figure 13.6 requires that every set of tuples Ss fit in main memory; we shall look at extensions of the algorithm to avoid this requirement later in this section. Then, the corresponding tuples (if any) of the other relation are read in, and are processed as they are read.

Figure 13.7 shows two relations that are sorted on their join attribute a1. It is in- structive to go through the steps of the merge join algorithm on the relations shown in the figure.

Since the relations are in sorted order, tuples with the same value on the join attributes are in consecutive order. Thereby, each tuple in the sorted order needs to be read only once, and, as a result, each block is also read only once. Since it makes only a single pass through both files, the merge join method is efficient; the number of block accesses is equal to the sum of the number of blocks in both files, br + bs.

If either of the input relations r and s is not sorted on the join attributes, they can be sorted first, and then the merge join algorithm can be used. The merge join algorithm can also be easily extended from natural joins to the more general case of equi-joins.

Suppose the merge join scheme is applied to our example of depositor customer.

The join attribute here is customer-name. Suppose that the relations are already sorted on the join attribute customer-name. In this case, the merge join takes a total of 400 + 100 = 500 block accesses. Suppose the relations are not sorted, and the memory size is the worst case of three blocks. Sorting customer takes 400 ∗ (2plog2(400/3)l + 1), or 6800, block transfers, with 400 more transfers to write out the result. Similarly, sorting depositor takes 100 ∗ (2plog2(100/3)l + 1), or 1300, transfers, with 100 more transfers to write it out. Thus, the total cost is 9100 block transfers if the relations are not sorted, and the memory size is just 3 blocks.

With a memory size of 25 blocks, sorting the relation customer takes a total of just 400 ∗ (2plog24(400/25) + 1) = 1200 block transfers, while sorting depositor takes 300 block transfers. Adding the cost of writing out the sorted results and reading them back gives a total cost of 2500 block transfers if the relations are not sorted and the memory size is 25 blocks.

As mentioned earlier, the merge join algorithm of Figure 13.6 requires that the set Ss of all tuples with the same value for the join attributes must fit in main memory.

This requirement can usually be met, even if the relation s is large. If it cannot be met, a block nested-loop join must be performed between Ss and the tuples in r with the same values for the join attributes. The overall cost of the merge join increases as a result.

It is also possible to perform a variation of the merge join operation on unsorted tuples, if secondary indices exist on both join attributes. The algorithm scans the records through the indices, resulting in their being retrieved in sorted order. This variation presents a significant drawback, however, since records may be scattered throughout the file blocks. Hence, each tuple access could involve accessing a disk block, and that is costly.

To avoid this cost, we can use a hybrid merge – join technique, which combines indices with merge join. Suppose that one of the relations is sorted; the other is un- sorted, but has a secondary B+-tree index on the join attributes. The hybrid merge – join algorithm merges the sorted relation with the leaf entries of the secondary B+- tree index. The result file contains tuples from the sorted relation and addresses for tuples of the unsorted relation. The result file is then sorted on the addresses of tuples of the unsorted relation, allowing efficient retrieval of the corresponding tuples, in physical storage order, to complete the join. Extensions of the technique to handle two unsorted relations are left as an exercise for you.

Hash Join

Like the merge join algorithm, the hash join algorithm can be used to implement natural joins and equi-joins. In the hash join algorithm, a hash function h is used to partition tuples of both relations. The basic idea is to partition the tuples of each of the relations into sets that have the same hash value on the join attributes.

We assume that

The hash function h should have the “goodness” properties of randomness and uniformity that we discussed in Chapter 12. Figure 13.8 depicts the partitioning of the relations.

The idea behind the hash join algorithm is this: Suppose that an r tuple and an s tuple satisfy the join condition; then, they will have the same value for the join attributes. If that value is hashed to some value i, the r tuple has to be in Hri and the s tuple in Hsi . Therefore, r tuples in Hri need only to be compared with s tuples in Hsi ; they do not need to be compared with s tuples in any other partition.

For example, if d is a tuple in depositor, c a tuple in customer, and h a hash function on the customer-name attributes of the tuples, then d and c must be tested only if

h(c)= h(d). If h(c) j= h(d), then c and d must have different values for customer-name. However, if h(c) = h(d), we must test c and d to see whether the values in their join attributes are the same, since it is possible that c and d have different customer-names that have the same hash value.

Figure 13.9 shows the details of the hash join algorithm to compute the natural join of relations r and s. As in the merge join algorithm, tr ts denotes the concatenation of the attributes of tuples tr and ts, followed by projecting out repeated at- tributes. After the partitioning of the relations, the rest of the hash join code performs a separate indexed nested-loop join on each of the partition pairs i, for i = 0,... , nh. To do so, it first builds a hash index on each Hsi , and then probes (that is, looks up Hsi ) with tuples from Hri . The relation s is the build input, and r is the probe input.

The hash index on Hsi is built in memory, so there is no need to access the disk to retrieve the tuples. The hash function used to build this hash index is different from the hash function h used earlier, but is still applied to only the join attributes. In the course of the indexed nested-loop join, the system uses this hash index to retrieve records that will match records in the probe input.

The build and probe phases require only a single pass through both the build and probe inputs. It is straightforward to extend the hash join algorithm to compute general equi-joins.

The value nh must be chosen to be large enough such that, for each i, the tuples in the partition Hsi of the build relation, along with the hash index on the partition, will fit in memory. It is not necessary for the partitions of the probe relation to fit in memory. Clearly, it is best to use the smaller input relation as the build relation. If the size of the build relation is bs blocks, then, for each of the nh partitions to be of size less than or equal to M , nh must be at least pbs/M l. More precisely stated, we have

to account for the extra space occupied by the hash index on the partition as well, so nh should be correspondingly larger. For simplicity, we sometimes ignore the space requirement of the hash index in our analysis.

Recursive Partitioning

If the value of nh is greater than or equal to the number of page frames of memory, the relations cannot be partitioned in one pass, since there will not be enough buffer pages. Instead, partitioning has to be done in repeated passes. In one pass, the input can be split into at most as many partitions as there are page frames available for use as output buffers. Each bucket generated by one pass is separately read in and partitioned again in the next pass, to create smaller partitions. The hash function used in a pass is, of course, different from the one used in the previous pass. The system repeats this splitting of the input until each partition of the build input fits in memory. Such partitioning is called recursive partitioning.

A relation does not need recursive partitioning if M > nh +1, or equivalently M > (bs/M ) + 1, which simplifies (approximately) to M > √bs. For example, consider a memory size of 12 megabytes, divided into 4-kilobyte blocks; it would contain a total of 3000 blocks. We can use a memory of this size to partition relations of size 9 million blocks, which is 36 gigabytes. Similarly, a relation of size 1 gigabyte requires √250000 blocks, or about 2 megabytes, to avoid recursive partitioning.

Handling of Overflows

Hash-table overflow occurs in partition i of the build relation s if the hash index on Hsi is larger than main memory. Hash-table overflow can occur if there are many tuples in the build relation with the same values for the join attributes, or if the hash function does not have the properties of randomness and uniformity. In either case, some of the partitions will have more tuples than the average, whereas others will have fewer; partitioning is then said to be skewed.

We can handle a small amount of skew by increasing the number of partitions so that the expected size of each partition (including the hash index on the partition) is somewhat less than the size of memory. The number of partitions is therefore in- creased by a small value called the fudge factor, which is usually about 20 percent of the number of hash partitions computed as described in Section 13.5.5.

Even if we are conservative on the sizes of the partitions, by using a fudge factor, overflows can still occur. Hash-table overflows can be handled by either overflow resolution or overflow avoidance. Overflow resolution is performed during the build phase, if a hash-index overflow is detected. Overflow resolution proceeds in this way: If Hsi , for any i, is found to be too large, it is further partitioned into smaller partitions by using a different hash function. Similarly, Hri is also partitioned using the new hash function, and only tuples in the matching partitions need to be joined.

In contrast, overflow avoidance performs the partitioning carefully, so that over- flows never occur during the build phase. In overflow avoidance, the build relation s is initially partitioned into many small partitions, and then some partitions are com- bined in such a way that each combined partition fits in memory. The probe relation r is partitioned in the same way as the combined partitions on s, but the sizes of Hri do not matter.

If a large number of tuples in s have the same value for the join attributes, the resolution and avoidance techniques may fail on some partitions. In that case, instead of creating an in-memory hash index and using a nested-loop join to join the partitions, we can use other join techniques, such as block nested-loop join, on those partitions.

Cost of Hash Join

We now consider the cost of a hash join. Our analysis assumes that there is no hash- table overflow. First, consider the case where recursive partitioning is not required. The partitioning of the two relations r and s calls for a complete reading of both rela- tions, and a subsequent writing back of them. This operation requires 2(br + bs) block accesses, where br and bs denote the number of blocks containing records of relations r and s respectively. The build and probe phases read each of the partitions once, call- ing for a further br + bs accesses. The number of blocks occupied by partitions could be slightly more than br + bs, as a result of partially filled blocks. Accessing such par- tially filled blocks can add an overhead of at most 2nh for each of the relations, since each of the nh partitions could have a partially filled block that has to be written and read back. Thus, the cost estimate for a hash join is

3(br + bs)+ 4nh

The overhead 4nh is quite small compared to br + bs, and can be ignored.

Now consider the case where recursive partitioning is required. Each pass reduces the size of each of the partitions by an expected factor of M − 1; and passes are repeated until each partition is of size at most M blocks. The expected number of passes required for partitioning s is therefore plogM −1(bs) − 1l. Since, in each pass, every block of s is read in and written out, the total block transfers for partitioning of s is 2bsplogM −1(bs) − 1l. The number of passes for partitioning of r is the same as the number of passes for partitioning of s, therefore the cost estimate for the join is

2(br + bs)plogM −1(bs) − 1l + br + bs

Consider, for example, the join customer depositor. With a memory size of 20 blocks, depositor can be partitioned into five partitions, each of size 20 blocks, which size will fit into memory. Only one pass is required for the partitioning. The relation customer is similarly partitioned into five partitions, each of size 80. Ignoring the cost of writing partially filled blocks, the cost is 3(100 + 400) = 1500 block transfers.

The hash join can be improved if the main memory size is large. When the entire build input can be kept in main memory, nh can be set to 0; then, the hash join algorithm executes quickly, without partitioning the relations into temporary files, regardless of the probe input’s size. The cost estimate goes down to br + bs.

Hybrid Hash – Join

The hybrid hash – join algorithm performs another optimization; it is useful when memory sizes are relatively large, but not all of the build relation fits in memory. The partitioning phase of the hash join algorithm needs one block of memory as a buffer for each partition that is created, and one block of memory as an input buffer. Hence, a total of nh +1 blocks of memory are needed for the partitioning the two relations.

If memory is larger than nh + 1, we can use the rest of memory (M − nh − 1 blocks) to buffer the first partition of the build input (that is, Hs0 ), so that it will not need to be written out and read back in. Further, the hash function is designed in such a way that the hash index on Hs0 fits in M − nh − 1 blocks, in order that, at the end of partitioning of s, Hs0 is completely in memory and a hash index can be built on Hs0 .

When the system partitions r it again does not write tuples in Hr0 to disk; instead, as it generates them, the system uses them to probe the memory-resident hash index on Hs0 , and to generate output tuples of the join. After they are used for probing, the tuples can be discarded, so the partition Hr0 does not occupy any memory space. Thus, a write and a read access have been saved for each block of both Hr0 and Hs0 . The system writes out tuples in the other partitions as usual, and joins them later.

The savings of hybrid hash – join can be significant if the build input is only slightly bigger than memory.

If the size of the build relation is bs, nh is approximately equal to bs/M . Thus, hybrid hash – join is most useful if M >> bs/M , or M >> √bs, where the notation >> denotes much larger than. For example, suppose the block size is 4 kilobytes, and the build relation size is 1 gigabyte. Then, the hybrid hash – join algorithm is useful if the size of memory is significantly more than 2 megabytes; memory sizes of 100 megabytes or more are common on computers today.

Consider the join customer depositor again. With a memory size of 25 blocks, depositor can be partitioned into five partitions, each of size 20 blocks, and the first of the partitions of the build relation can be kept in memory. It occupies 20 blocks of memory; one block is for input and one block each is for buffering the other four partitions. The relation customer can be similarly partitioned into five partitions each of size 80, the first of which the system uses right away for probing, instead of writing it out and reading it back in. Ignoring the cost of writing partially filled blocks, the cost is 3(80 + 320) + 20 + 80 = 1300 block transfers, instead of 1500 block transfers without the hybrid hashing optimization.

Complex Joins

Nested-loop and block nested-loop joins can be used regardless of the join conditions. The other join techniques are more efficient than the nested-loop join and its variants, but can handle only simple join conditions, such as natural joins or equijoins. We can implement joins with complex join conditions, such as conjunctions and disjunctions, by using the efficient join techniques, if we apply the techniques developed in Section 13.3.4 for handling complex selections.

Consider the following join with a conjunctive condition:

One or more of the join techniques described earlier may be applicable for joins on the individual conditions rs, and so on. We can compute the overall join by first computing the result of one of these simpler joins r pair of tuples in the intermediate result consists of one tuple from r and one from s.

The result of the complete join consists of those tuples in the intermediate result that satisfy the remaining conditions

These conditions can be tested as tuples in rs are being generated.

A join whose condition is disjunctive can be computed in this way: Consider

The join can be computed as the union of the records in individual joins r Section 13.6 describes algorithms for computing the union of relations.

Comments

Post a Comment